Reconceptualizing Big Data

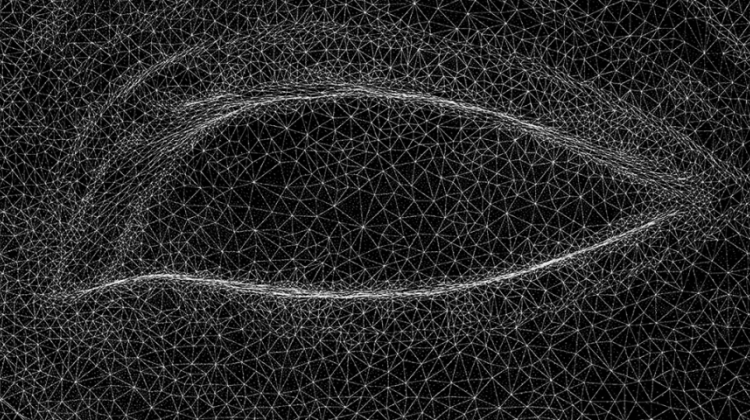

Disentangled No. 1, Nora Al-Badri and Jan Nikolai Nelles

Increasingly, private and governmental actors use algorithmic processes to understand the physical world and to craft interventions in it. Framed through big data and data science, these interventions appear empirical, which lends them an aura of impartiality and truth. Yet despite this empirical facade, the world-making of big data archiving tends to perpetuate systemic bias or singular perspectives on the physical world it represents. Instances such as drone strikes and racial discrimination in facial recognition demonstrate this gap between intention and outcome.

In Uncertain Archives: Critical Keywords for Big Data (MIT Press, 2021), scholars and artists across a range of disciplines interrogate the vernacular and lexicon used to define and bound the study of big data, reflecting on how knowledge is determined, understood, and assimilated through these terms. Uncertain Archives evolved from a series of conferences that examined the terms unknown, error, and vulnerability. The book was edited by Nanna Bonde Thylstrup, Daniela Agostinho, Annie Ring, Catherine D'Ignazio, and Kristin Veel. Contributing authors include N. Katherine Hayles, Wendy Hui Kyong Chun, Johanna Drucker, Lisa Gitelman, Safiya Noble, Sarah T. Roberts, and Nicole Starosielski.

“Uncertain Archives aims to intervene in the field of critical data studies by reconceptualizing and infusing new meaning into established terms such as latency and outlier,” said co-editor Nanna Bonde Thylstrup at the book’s virtual launch on September 22, 2021. “Uncertain Archives also proposes the introduction of new terms and concepts, such as Copynorm and Hauntology, to make sense of big data in all its richness and diversity.”

After you have ordered a copy of Uncertain Archives, be sure to visit the links on the right to learn more about the editors, contributing authors, and artists, as well as their relevant publications and work.